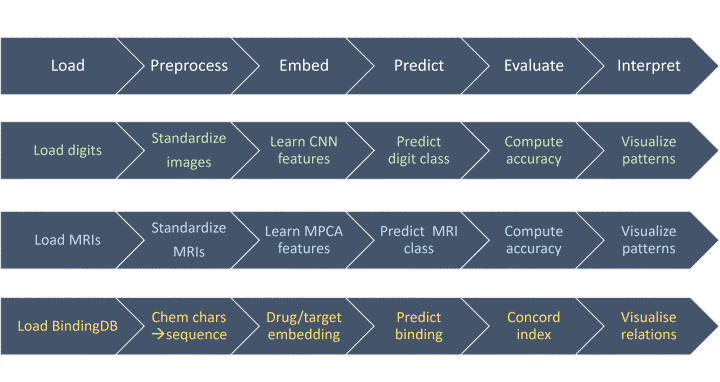

PyKale is a library in the PyTorch ecosystem that aims to make machine learning more accessible to interdisciplinary research by bridging gaps between data, software, and end users. Its accessible, scalable, and sustainable design, guided by green machine learning principles, helps both machine learning experts and end users do better research. PyKale has a unified pipeline-based API and currently focuses on multimodal learning and transfer learning for graphs, images, texts, and videos, supported by models for deep learning and dimensionality reduction.

PyKale enforces standardization and minimalism through the green machine learning concepts of reducing repetition and redundancy, reusing existing resources, and recycling learning models across areas. It aims to enable and accelerate interdisciplinary, knowledge-aware machine learning research for graphs, images, texts, and videos in applications including bioinformatics, graph analysis, image/video recognition, and medical imaging, with an overarching theme of leveraging knowledge from multiple sources for accurate and interpretable prediction.